Fine-Tune the GPT-2 Model on the Works of Shakespeare

GPT-2

GPT-2 (Generative Pre-trained Transformer 2) is a large-scale language model developed by OpenAI.

GPT-2 is designed to generate human-like text by predicting the next token (a word or a piece of a word) in a sequence, based on the tokens that came before it. It is built on the transformer architecture, a deep-learning architecture whose self-attention mechanism lets the model capture complex relationships between words and their context.
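The phrase "based on the words that came before it" is enforced inside the transformer by causal (masked) self-attention: each position may only look at itself and earlier positions. The plain-Python sketch below shows a single attention head doing exactly that. It is a simplification under stated assumptions: it uses the input vectors directly as queries, keys, and values, whereas GPT-2 computes them with learned projections and stacks many multi-head layers. The function name and toy vectors are hypothetical.

```python
import math


def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Each argument is a list of equal-length float vectors, one per position.
    Returns (outputs, weights); weights[i][j] is how strongly position i
    attends to position j, and is 0.0 whenever j > i (a "future" position).
    """
    n, d_k = len(keys), len(keys[0])
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Score only positions 0..i; later positions are masked out.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d_k)
                  for j in range(i + 1)]
        # Numerically stable softmax over the visible positions.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps] + [0.0] * (n - i - 1)
        weights.append(row)
        # Each output is the attention-weighted average of the value vectors.
        outputs.append([sum(row[j] * values[j][k] for j in range(n))
                        for k in range(len(values[0]))])
    return outputs, weights


# Three toy 2-dimensional "token" vectors, used as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = causal_self_attention(x, x, x)
for row in w:
    print([round(p, 3) for p in row])
```

The first position can only attend to itself, so its weight row is `[1.0, 0.0, 0.0]`; every row has zeros to the right of the diagonal.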

Fine-tuning

Fine-tuning a GPT-2 model refers to the process of taking a pre-trained GPT-2 model and training it further on a specific task or dataset to improve its performance on that particular task.
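As a loose analogy (this is not how GPT-2 is trained), the sketch below uses a toy bigram model that just counts which word follows which. It is first "pre-trained" on one small corpus and then trained further on Shakespeare-style text without resetting its counts. That is the essence of fine-tuning: the new data adjusts what was already learned instead of starting from scratch. GPT-2 does the same with neural-network weights updated by gradient descent. The function names and corpora are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(counts, text):
    """Add the word pairs in `text` to `counts` (updated in place)."""
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the word seen most often after `word`, or None if unseen."""
    followers = counts.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None


# "Pre-training" on a small general-purpose corpus (made up for illustration).
model = train_bigrams(defaultdict(Counter), "the car is red . the car is fast .")
before = predict_next(model, "the")

# "Fine-tuning": keep the learned counts and continue training on domain text.
train_bigrams(model, "all the world's a stage . " * 3)
after = predict_next(model, "the")

print(before, "->", after)  # car -> world's
```

The pre-trained knowledge is still there (`model["the"]["car"]` is still 2); it has simply been outweighed by the domain data.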

Let’s say you work for a customer support company that receives a large number of customer inquiries via email. Your job is to develop an automated system that can understand and respond to these inquiries, in order to reduce the workload of your customer support team.

One way to approach this problem is to use a GPT-2 model that has been fine-tuned on a dataset of customer inquiries and corresponding responses. The idea is to train the model to generate appropriate responses based on the content of the incoming emails.
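One common way to prepare such a dataset (an assumption, not something the scenario prescribes) is to join each inquiry and its response into a single training string with a fixed template, ending it with GPT-2's `<|endoftext|>` token so the model learns where a reply stops. At inference time the model receives the template up to "Response:" and generates the rest. The template, function names, and sample data below are hypothetical.

```python
EOS = "<|endoftext|>"  # GPT-2's end-of-text token


def format_example(inquiry, response):
    """Turn one inquiry/response pair into a single training string.

    The "Inquiry:"/"Response:" labels are a hypothetical prompt format; any
    consistent format works as long as training and inference use the same one.
    """
    return f"Inquiry: {inquiry.strip()}\nResponse: {response.strip()}{EOS}"


def make_prompt(inquiry):
    """At inference time, end the prompt at "Response:" so the model writes the reply."""
    return f"Inquiry: {inquiry.strip()}\nResponse:"


pairs = [
    ("Where is my order?", "Sorry for the delay! Could you share your order number?"),
    ("How do I reset my password?", "Click 'Forgot password' on the sign-in page."),
]
corpus = "\n".join(format_example(q, a) for q, a in pairs)
print(corpus)
print(make_prompt("Can I change my shipping address?"))
```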

Preprocessing Data

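1. Creating a Tokenizer: A tokenizer splits text into individual tokens (words, sub-word pieces, punctuation marks, etc.) that can be used as input to a machine-learning model. Common tokenizer types include WordPiece, Byte Pair Encoding (BPE), and SentencePiece. The example below uses the WordPiece tokenizer of `bert-base-uncased` only to illustrate the preprocessing steps; GPT-2 uses its own byte-level BPE tokenizer, which the fine-tuning code loads with `GPT2Tokenizer`.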

```python
# pip install transformers tensorflow

from transformers import AutoTokenizer
import tensorflow as tf

# create the tokenizer (BERT's WordPiece tokenizer, used here for illustration)
tokenizer = AutoTokenizer.from_pretrained('bert-base-uncased')

# tokenize the sentence
text = "All the world's a stage, and all the men and women merely players."
tokens = tokenizer.tokenize(text)
print(tokens)
# ['all', 'the', 'world', "'", 's', 'a', 'stage', ',', 'and', 'all', 'the', 'men', 'and', 'women', 'merely', 'players', '.']
```

2. Encoding the Text: Encoding is the process of converting text into a numerical form that can be fed into a machine-learning model. In NLP, this typically means mapping each token to its integer index (ID) in the tokenizer's vocabulary.

```python
# encode the tokens
input_ids = tokenizer.convert_tokens_to_ids(tokens)
print(input_ids)
# [2035, 1996, 2088, 1005, 1055, 1037, 2754, 1010, 1998, 2035, 1996, 2273, 1998, 2308, 6414, 2867, 1012]
```

3. Batching the Data: Batching is the process of grouping encoded examples into batches of a fixed size. Processing several examples at once speeds up training and makes better use of the available hardware, especially GPUs. Here’s how to batch the encoded data:

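```python
# batch the encoded text
# convert_tokens_to_ids() returns only IDs, so build the other two inputs here
attention_mask = [1] * len(input_ids)  # 1 marks a real (non-padding) token
token_type_ids = [0] * len(input_ids)  # single segment, so every type ID is 0

batch_size = 1  # set the batch size to 1 to create a single example

# wrap each list in an outer list so the dataset holds ONE 17-token example
# (slicing the flat lists would instead yield 17 one-token examples)
dataset = tf.data.Dataset.from_tensor_slices(([input_ids], [attention_mask], [token_type_ids]))
dataset = dataset.batch(batch_size)
```

Hugging Face Transformers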

Let’s say you work for a social media monitoring company that helps businesses track mentions of their brand on social media platforms. Your job is to develop a machine-learning model that can classify social media posts as either positive or negative, based on the sentiment expressed in the post.

One way to approach this problem is to use the Transformers library from Hugging Face, which provides a wide range of pre-trained models for natural language processing tasks, including sentiment analysis.


FINE-TUNE GPT-2

Here are the general steps you can follow to fine-tune a GPT-2 model using the Shakespeare Dataset:

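- Load the Shakespeare dataset (the code below reads it from a plain-text file; the `datasets` library is an alternative).
- Preprocess the data by creating a tokenizer, encoding the text, and batching the data.
- Load a pre-trained GPT-2 model using the `transformers` library.
- Set up the training loop, including the optimizer and learning-rate scheduler. `GPT2LMHeadModel` computes the language-modeling (cross-entropy) loss itself when `labels` are passed, so no separate loss function is needed.
- Train the model on the Shakespeare dataset.
- Generate some text using the trained model (the code below stops after saving the model).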
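For language modeling, "batching the data" usually means more than grouping sentences: the whole corpus is tokenized once, the token stream is cut into equal-length blocks that fit the model's context window (at most 1024 tokens for GPT-2), and the blocks are grouped into batches. The plain-Python sketch below shows that step with hypothetical helper names and toy numbers; with PyTorch, `torch.tensor(blocks)` would turn the result into a tensor of shape `(num_blocks, block_size)`.

```python
def make_blocks(token_ids, block_size):
    """Split a long list of token IDs into consecutive, equal-length blocks.

    The tail that does not fill a whole block is dropped.
    """
    usable = len(token_ids) - len(token_ids) % block_size
    return [token_ids[i:i + block_size] for i in range(0, usable, block_size)]


def make_batches(blocks, batch_size):
    """Group blocks into batches; the last batch may be smaller."""
    return [blocks[i:i + batch_size] for i in range(0, len(blocks), batch_size)]


# Stand-in for the token IDs of a whole text file (a real corpus is far longer).
token_ids = list(range(23))
blocks = make_blocks(token_ids, block_size=5)   # 4 blocks of 5; 3 tokens dropped
batches = make_batches(blocks, batch_size=3)    # one batch of 3, one batch of 1
print(len(blocks), [len(b) for b in batches])   # 4 [3, 1]
```

Dropping the short tail keeps every block the same length, so no padding (and no padding mask) is needed.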

```python
import os
import random

import torch
from torch.optim import AdamW  # the AdamW class in transformers is deprecated; use PyTorch's
from transformers import GPT2Tokenizer, GPT2LMHeadModel, get_linear_schedule_with_warmup

# set random seed for reproducibility
random.seed(42)
torch.manual_seed(42)

# load text file as dataset
with open('/content/shakespear.txt', 'r', encoding='utf-8') as f:
    text = f.read()
```

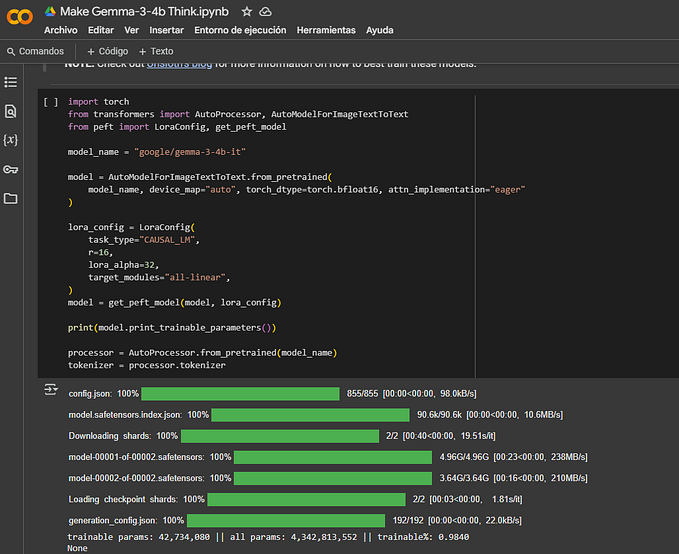

```python
# initialize GPT-2 tokenizer and model (the 124M-parameter 'gpt2' checkpoint)
tokenizer = GPT2Tokenizer.from_pretrained('gpt2')
model = GPT2LMHeadModel.from_pretrained('gpt2')

# set device to GPU if available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)

# tokenize the WHOLE text, then cut it into fixed-length blocks
# (encode(..., max_length=512, truncation=True) would keep only the first 512
# tokens of the file). A warning that the sequence is longer than the model's
# maximum length is expected here; each block below stays within the limit.
block_size = 512
token_ids = tokenizer.encode(text)
num_blocks = len(token_ids) // block_size
input_ids = torch.tensor(token_ids[:num_blocks * block_size]).view(num_blocks, block_size)

# set training parameters
train_batch_size = 4
num_train_epochs = 3
learning_rate = 5e-5

# initialize optimizer and scheduler
optimizer = AdamW(model.parameters(), lr=learning_rate)
steps_per_epoch = (num_blocks + train_batch_size - 1) // train_batch_size
total_steps = steps_per_epoch * num_train_epochs
scheduler = get_linear_schedule_with_warmup(optimizer, num_warmup_steps=0, num_training_steps=total_steps)

# train the model
model.train()
for epoch in range(num_train_epochs):
    epoch_loss = 0.0
    # visit the blocks in a new random order each epoch
    order = torch.randperm(num_blocks)
    for i in range(0, num_blocks, train_batch_size):
        # select the current batch of blocks and move it to the device
        batch_input_ids = input_ids[order[i:i + train_batch_size]].to(device)

        # clear gradients
        optimizer.zero_grad()

        # forward pass: GPT2LMHeadModel shifts the labels internally, so the
        # labels are simply the input IDs (no manual shift, no padding to mask)
        outputs = model(input_ids=batch_input_ids, labels=batch_input_ids)
        loss = outputs.loss

        # backward pass
        loss.backward()
        epoch_loss += loss.item()

        # clip gradients to prevent exploding gradients
        torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

        # update parameters and learning rate
        optimizer.step()
        scheduler.step()

    # average loss per batch for this epoch
    print('Epoch: {}, Loss: {:.4f}'.format(epoch + 1, epoch_loss / steps_per_epoch))

# save the trained model
output_dir = './results/'
os.makedirs(output_dir, exist_ok=True)
model.save_pretrained(output_dir)
tokenizer.save_pretrained(output_dir)
```

Here’s a detailed explanation of the code:

- The `os` module provides operating-system-dependent functionality, such as reading from or writing to the file system.
- The `random` module provides functions for generating random numbers.
- The `torch` module provides tensor computations with strong GPU acceleration.
- Several classes and functions are imported from the `transformers` library, which provides state-of-the-art natural language processing (NLP) models.
- `random.seed(42)` and `torch.manual_seed(42)` set the random seeds for reproducibility.
- The `open()` function opens a file named `shakespear.txt`, which contains the text data. The `'r'` argument opens the file in read mode, and `encoding='utf-8'` specifies the character encoding. The text is then read and stored in a variable called `text`.
- The `GPT2Tokenizer` and `GPT2LMHeadModel` classes from `transformers` are initialized with the pre-trained `gpt2` weights. The tokenizer converts the text into token IDs, and `GPT2LMHeadModel` (GPT-2 with a language-modeling head) is the model being fine-tuned.
- The `device` variable is set to the GPU if one is available, otherwise to the CPU, and the model is moved to that device.
- The `input_ids` variable holds the text tokenized with the GPT-2 tokenizer as a PyTorch tensor of token IDs, with each sequence at most 512 tokens long (GPT-2 accepts up to 1024).
- The training parameters are set: a batch size of 4, 3 epochs, and a learning rate of 5e-5.
- The optimizer is `AdamW`, and the scheduler from `get_linear_schedule_with_warmup` is created with `num_warmup_steps=0`, so the learning rate simply decays linearly from 5e-5 to 0 over the training steps.
- `model.train()` puts the model in training mode (which enables dropout).
- The code then loops over each epoch and each batch of training data. For each batch, it computes the loss, backpropagates the gradients, clips them to a maximum norm of 1.0, updates the model parameters with `optimizer.step()`, and adjusts the learning rate with `scheduler.step()`. The average loss for the epoch is printed.
- The trained model and tokenizer are saved to the `./results/` directory, which is created if it does not exist, using `model.save_pretrained()` and `tokenizer.save_pretrained()`.
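When `labels` are passed to `GPT2LMHeadModel`, the model shifts them itself before computing the cross-entropy loss: the logits at position t are scored against the token at position t + 1, and label positions set to -100 are ignored. That is why the labels can simply be a copy of the input IDs, and why shifting them again by hand would train the model to predict two tokens ahead. The plain-Python sketch below re-implements that calculation for one sequence; it is a simplified illustration with hypothetical names, not the library's code.

```python
import math


def causal_lm_loss(logits, labels, ignore_index=-100):
    """Mean next-token cross-entropy for one sequence.

    logits[t] holds one score per vocabulary entry at position t; labels[t]
    is the token at position t. The shift happens here: the prediction at
    position t is scored against the label at t + 1. Labels equal to
    ignore_index (e.g. padding) are skipped.
    """
    losses = []
    for t in range(len(labels) - 1):
        target = labels[t + 1]
        if target == ignore_index:
            continue
        scores = logits[t]
        # negative log-softmax of the target score, computed stably
        top = max(scores)
        log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
        losses.append(log_norm - scores[target])
    return sum(losses) / len(losses)


# Toy vocabulary of 3 tokens; the sequence is [0, 1, 2].
labels = [0, 1, 2]
# Confident, correct predictions: position 0 favors token 1, position 1 favors token 2.
good = [[0.0, 9.0, 0.0], [0.0, 0.0, 9.0], [0.0, 0.0, 0.0]]
# Uniform predictions: every token equally likely, so the loss is ln(3).
flat = [[0.0, 0.0, 0.0]] * 3
print(round(causal_lm_loss(good, labels), 4), round(causal_lm_loss(flat, labels), 4))
```

The logits at the last position are never used, because there is no next token to compare them with.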

Overall, this code trains a GPT-2 language model on a text dataset and saves the trained model and tokenizer for later use.
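The code saves the model but, despite the final step in the list above, does not generate any text. With `transformers`, the saved model can be reloaded with `GPT2LMHeadModel.from_pretrained('./results/')` and sampled with its `generate()` method (for example with `do_sample=True` and `top_k`). Under the hood, generation repeatedly turns the model's scores for the next token into probabilities and picks one. The plain-Python sketch below shows that single step with temperature and top-k sampling; the function name and toy scores are hypothetical.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Pick the index of the next token from a list of raw scores (logits).

    temperature (must be > 0): below 1 sharpens the distribution, above 1 flattens it.
    top_k keeps only the k highest-scoring tokens before sampling.
    """
    scaled = [s / temperature for s in logits]
    candidates = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]
    # softmax weights over the remaining candidates (subtract the max for stability)
    top = scaled[candidates[0]]
    weights = [math.exp(scaled[i] - top) for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


rng = random.Random(0)
logits = [2.0, 1.0, 0.5, -1.0]  # toy scores for a 4-token vocabulary
draws = [sample_next_token(logits, temperature=0.7, top_k=2, rng=rng) for _ in range(10)]
print(draws)  # only tokens 0 and 1 can appear
```

Appending each sampled token to the input and repeating the step produces a passage one token at a time.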